Magdalena Breitinger

PRODUCT DESIGNER

FINAL MASTER PROJECT

TOUCH CONTROL explores the potential of including the touch channel in the interaction with user interfaces.

Since user interfaces have become a big part of our daily lives and more interactions are happening on the screen, the interface needs to evolve as well.

Nonetheless, most interfaces still rely on visual interaction rather than including any of the other senses in the experience.

By adding haptic feedback to different elements of such user interfaces, this project evaluates the acceptance and usability of haptic feedback.

The question is: How strongly can we rely on haptic feedback when interacting with our everyday user interfaces?

And: What do the elements of the user interface have to look like?

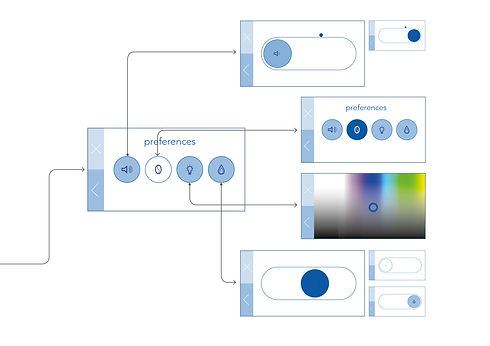

The outcome is twofold: a set of interface elements for the use of haptic feedback and, based on these elements, a user interface for the smart home system Interactive Intentional Programming.

With the help of these interface elements, haptic feedback can be used to communicate additional information to the user and make the interaction with the system more intuitive.

Especially in situations in which the user might alter actuations in ways that contradict previous intentions or influence other people’s decisions in the smart home, haptic feedback can be used to communicate the issues and contradictions. The interface can be displayed on both a stationary and a portable device.

CLAY MODEL

The first model was made of clay and served as an initial way to take the interface and its elements into 3D space. It built on the work conducted during the first semester of the project.

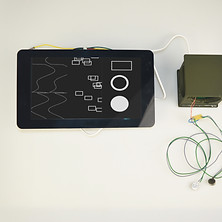

RASPBERRY PI

To take the interactions of the clay model onto a digital user interface, the interface was configured for the Raspberry Pi and improved from there.

USER TEST

The developed elements were transferred onto a portable device and tested with users.

The test explored different ways to include haptic feedback in an interface and how the participants reacted to this new interaction.

FOCUS GROUP

As a final step, the outcomes of the user test were implemented in a user interface. This interface was evaluated in a focus group, leading to the final design.

Design Space

The project is set within the area of the smart home and aims to improve the experience of interacting with its interfaces.

The number of smart home systems and products is constantly rising, leading to an increased number of people interacting with such products. This makes it necessary to simplify the interaction between user and system.

Interactive Intentional Programming (IIP) tackles this issue by taking a novel approach to the organization of smart home systems. Instead of simply taking commands preset by the user, it generates actuations based on the ‘intentions’ of the smart home, such as ‘energy saving’, ‘security’ or ‘convenience’.

This way, it makes it possible for all users of the house to achieve a satisfying result in their actuations.

Nonetheless, users need to be able to make changes while an actuation is taking place, which can be done within the ‘preferences’. While the ‘intentions’ and ‘scenarios’ will be set very rarely, the interaction with the ‘preferences’ can occur more frequently, when minor changes in the routine arise or the user is not satisfied with the outcome. As a result, the interaction has to be as intuitive as possible while still communicating important information to the user. Especially with more than one person living in the smart home and the use of the novel principles of IIP, it can be challenging not to confuse the user with a very complex interaction. This is why this project attempts to use haptic feedback to communicate such additional information to the user. To do so, the focus is on the direct interaction between user and interface, in order to identify the extent to which haptic feedback can be used to communicate IIP to the user.

Prototype and Testing

Following the initial evaluation of the clay model, the elements were translated from a three-dimensional model into a digital prototype. To do so, the elements were first programmed to include a visual and a haptic element.

The user test was the first main interaction between the potential user and the digital interface elements after the testing of the clay model. The outcomes of the test made it possible to build an interface around the users’ needs and wishes. Analyzing the results of both the pilot and the final test helped me to develop the project further in the area of user & society, especially through the qualitative data, while the work with the quantitative data helped me develop my own skills in the area of math, data & computing.

Initial Exploration

During the first semester, a clay model was built and tested with several people. This model was meant to help explore first shapes and interactions that could later be implemented in an interface. The three-dimensional element represented haptic feedback and its intensity before the actual coding began. The development of the clay model included a great deal of experimentation with these shapes and interactions. The process introduced a freer element to my design process and added a new method to my exploration of the area of Creativity & Aesthetics.

At the beginning of M 2.2, the qualitative test was analyzed and the possible elements were translated into the two-dimensional space of an interface. Furthermore, initial interactions were designed based on the feedback of the participants.

Focus Group

Since the development of the user interface also added certain features and a context to the interface elements, it was necessary to test them one final time. During this test, the functionality of the elements in different situations, especially in connection with IIP, had to be evaluated. The main objective was to explore the usability of haptic feedback showing contradictions, two-dimensional sliders and multi-user scenarios. Each of these objectives was realized in an element of the interface: the speaker element shows a contradiction with the intention of privacy, the light control mixes different kinds of haptic and visual feedback, and the heating slider represents a multi-user scenario. The test was also meant to identify any errors in the interaction with the interface so that misunderstandings could be resolved afterwards.

MOBILE INTERFACE

STATIONARY INTERFACE